Sauron Self-Alerting

Overview

Sauron offers a set of pre-built alerting rules concerning the health of the Sauron instance itself. These self-alerts are defined in Grafana and routed to Sauron contacts/owners via Alertmanager. However, you can configure these alerts, including whether or not to enable them using the APIs mentioned below.

Enabling/Disabling the Self-Alerting Feature

The overall Self-Alerting feature can be disabled or enabled like this:

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/actions?action=disable

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/actions?action=enable

And to view the enabled status:

curl -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/status

{"enabled":true}

A Sauron instance is created out of the box with Self-Alerting enabled.

Add custom email address for Self-Alerting

This api will add custom email address to self-alerting which is used in Alertmanager by smtp-receiver to send emails, if toEmails is not provided then contact-email will be used to send the mail.

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/

toEmails?emails=abc@gmail.com

For multiple emails:

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/

toEmails?emails=abc@gmail.com,xyz@gmail.com

Listing Available Alerts

To list the name and description of available alerts:

curl -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/alerts

[{"description":"Alert on the Prometheus head series dropping greatly","name":"prometheusseriesdropping"}]

Enabling/Disabling Specific Alerts

To disable or enable a specific alert, for example, the prometheusseriesdropping alert:

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/alerts/prometheusseriesdropping/actions?action=disable

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/alerts/prometheusseriesdropping/actions?action=enable

And to view the enabled status:

curl -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/alerts/prometheusseriesdropping/status

{"enabled":true}

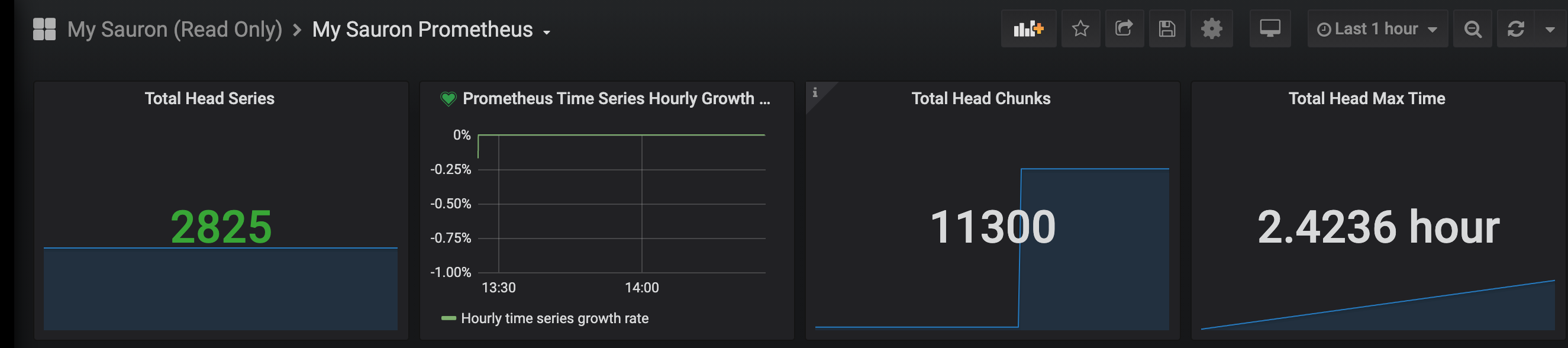

Once you enable an alert, it'll appear activated in Grafana within a minute or two. You'll be able to find the alert in

the My Dashboard related to the component that the alert deals with. For example, for prometheusseriesdropping,

navigate to the "My Prometheus" Dashboard. An active alert on a panel is indicated by a heart to the left of

the panel name:

Tweaking Configuration of Specific Alerts

We have limited support for modifying the parameters of the Sauron Self-Alerts. The alert parameters that can

be modified are:

* severity: an arbitrary string that you can set as needed, based on your Alertmanager configuration (see below)

* forMinutes: how many minutes the condition needs to fail for before the alert fires

* threshold: the threshold value of the alert condition

Again, using the prometheusseriesdropping alert as an example, to fetch the current configuration:

curl -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/alerts/prometheusseriesdropping

{"forMinutes":15,"severity":"warning","threshold":"-80"}

This particular alert fires on an 80% drop in metrics. If we want to change this to a 50% drop instead:

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting/alerts/prometheusseriesdropping -d '{"severity": "warning", "forMinutes": 15, "threshold": "-50.0"}'

{"forMinutes":15,"severity":"warning","threshold":"-50.0"}

Using Severity in Alertmanager

As mentioned above, you can set the Grafana alert severity of the Sauron Self-Alerts to whatever values you want. A value of "warning" is the default for Sauron Self-Alerts. But it's possible for you to configure more complex Alertmanager configurations that actually map the severity of Grafana alerts to the severity of Ocean alerts, if you choose. For example, an Alertmanager configuration like this will pass the severity of a Grafana Alert onto Ocean as-is, defaulting to a value of "warning":

receivers:

- name: Testing

webhook_configs:

- xxxxxxxxxxx

severity: '{{ if .CommonLabels.severity }}{{ .CommonLabels.severity| toLower }}{{ else }}warning{{ end }}'

Enabling/Disabling Future Self-Alerts By Default

The Sauron team expects to add more Self-Alerts over time. Once added, a new alert will immediately appear

via a call to /v1/selfalerting/alerts (mentioned above). But whether you want these new alerts to be enabled

when they become available (which is the default behavior) is up to you. If you'd like to have these future alerts disabled

when they become available, you can configure that like this:

curl -XPUT -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting -d '{"enableNewAlerts": false}'

To view whether this is already set:

curl -u sauron:mypassword https://api.handu-phx.handu.developers.oracledx.com/v1/selfalerting

{"enableNewAlerts":false}

Grafana Notification Channel for Alertmanager

Your Grafana is configured out of the box with a Notification Channel for Alertmanager. This is the Notification Channel used by the Sauron Self-Alerts, but you are free to use it for your own Grafana alerts as well.

Alertmanager Configuration

Since Sauron Self-Alerts are routed to Alertmanager, they require an Alertmanager configuration on your part in order to route to something like Ocean, Slack, or email. The following are complete examples of configuring routing to:

If you already have an Alertmanager route and receiver configured, any Self-Alerts will enter that existing receiver stream. If instead you wish to

separate out Self-Alerts from your ordinary alerts, you can use the

mysauron label to do this. Let's say you have a "Service-Production" receiver

configured to route to Ocean:

route:

receiver: Service-Production

group_by: ['alertname']

group_wait: 30s

group_interval: 1m

repeat_interval: 3m

receivers:

- name: Service-Production

webhook_configs:

- xxxxxxxxxxxxxxxxxx

and you want to configure Self-Alerts to be sent to Slack instead of Ocean. You can do that by adding

a Slack receiver (abbreviated example, see here for a

full example of a Slack configuration), and a route that sends alerts with the mysauron label to that receiver:

global:

smtp_smarthost: smtp.us-ashburn-1.oraclecloud.com:587

smtp_from: sauron-alert@sauron.us-ashburn-1.oracledx.com

smtp_auth_username: ocid1.user.oc1.xxxxxxxxxx.com

smtp_auth_password: somepasswd

route:

receiver: Service-Production

group_by: ['alertname']

group_wait: 30s

group_interval: 1m

repeat_interval: 3m

routes:

- receiver: Slack-Receiver

group_by: ['alertname']

match_re:

mysauron: .+

receivers:

- name: Service-Production

webhook_configs:

- xxxxxxxxxxxxxxxxxx

- name: Slack-Receiver

slack_configs:

- icon_url: https://alertmanager.handu-phx.handu.developers.oracledx.com

send_resolved: true

...

Prometheus Alerts

In this section, we will list proactive action on Prometheus which can be taken by customer and reduce the noise by itself.

Prometheus Disk Usage High

Default Prometheus storage will be 500 GB per instance and we provide highly available Prometheus-as-a-service which requires two instances. This alert will be triggered whenever Prometheus disk usage reached more than 90% in last 15 minutes.

Possible Action

- Review your Prometheus configuration. There could be a possibility that you are scraping aggressively, consider increasing the scrape interval.

- Your metrics might have high cardinality, it's causing so much disk usage. You can open your Prometheus status page and look for highest cardinality Labels and drop those labels that have higher than 1,000 cardinalities. It is advised to keep just 1 label per metric name that has more than 1,000 cardinalities.

- If the above actions don't work for you, consider to shorten the retention period. You will need to have the Sauron team to change the retention period for you.

If none of the options work for you, please contact Sauron team.

My Sauron - Time Series Dropping

We have configured this alert when Prometheus TSDB Head Series has dropped by 80% in last 1 hour. This means there is some issue at customer end, their scrape either not pushing the data or some scrape configuration changes which consequence drop in seeding/pulling/scarping metric data.

Possible Action

This only needs to be take care by the customer until and unless there were some major outage at backend. Customer can recheck its scrape configuration, server configuration, connectivity to Prometheus/PushProx/Shuttleproxy servers.

OpenSearch Alerts

In this section, we will list proactive action on OpenSearch which can be taken by the customer and reduce the noise by itself.

OpenSearch Disk Usage High

Default threshold will be set 70%. Once it crossed 75%, then Sauron team will get the alert.

Possible Action

- Please check whether

DEBUGmode is enabled or not in any of your apps. If yes, disable it. - If you have indices that named differently, such as

- index name without timestamp

- index name with timestamp that is outside the supported 3 timestamp-patterns please delete all those indices and fix the log shipper client settings to use one of the 3 supported index patterns:

-%{+yyyy.MM.dd}-%{+yyyy-MM-dd}-%{+yyyy_MM_dd}

- Optimize the data that is being sent to OpenSearch. You can follow the steps described in the documentation about tuning for disk usage.

- If you are sending logs/metrics from different regions to a single Sauron endpoint (causing increased clients and traffic), Please request new Sauron instances in the specific regions.

- Consider shortening the retention period if possible. You will need to have the Sauron team to change the retention period for you.

- It might be possible that logs ingestion actually grown due to increased number of clients. Then raise the ticket on support channel to increase the OpenSearch disk size.

OpenSearch Shard Size Too Large

Size of some OpenSearch shards has been reached to 50 GB and need to be lowered to 30 GB.

Possible Action

- Please consider increasing the shards count of the indices.

- Try to filter out the unnecessary messages from the client-end.

- Split one big index into several smaller indices.

Alertmanager Alerts

Alertmanager Notification Failed

Alertmanager tried to send an alert notification through a customer-configured receiver, but that attempt ended in failure, and the notification was not sent.

Possible Action

When Alertmanager fails to send an alert notification, the Alertmanager logs will normally include some information about why the attempt failed. You can use the Sauron API to fetch the logs.

Some common failure modes are:

- SMTP authentication failed:

my-receiver-name/email[0]: notify retry canceled after 16 attempts: *smtp.plainAuth auth: 535 Authentication credentials invalid

Use the Sauron API to update your Alertmanager configuration to specify valid SMTP credentials.

- OCEAN authentication failed:

my-receiver-name/webhook[0]: notify retry canceled due to unrecoverable error after 1 attempts: unexpected status code 401: https://oceanclient.ocs.oraclecloud.com/api/v1/webhooks/json/prometheus-alertmanager: <html>\n<head>\n<meta http-equiv=\"Content-Type\" content=\"text/html;charset=ISO-8859-1\"/>\n<title>Error 401 Unauthorized</title>\n</head>\n<body><h2>HTTP ERROR 401 Unauthorized</h2>\n<table>\n<tr><th>URI:</th><td>/api/v1/webhooks/json/prometheus-alertmanager</td></tr>\n<tr><th>STATUS:</th><td>401</td></tr>\n<tr><th>MESSAGE:</th><td>Unauthorized</td></tr>\n<tr><th>SERVLET:</th><td>jersey</td></tr>\n</table>\n\n</body>\n</html>\n

Request a new OCEAN token, and use the Sauron API to update your Alertmanager configuration with the new token. See the OCEAN External Webhook Onboarding Guide for more information.

- Slack authentication failed:

my-receiver-name/slack[0]: notify retry canceled due to unrecoverable error after 1 attempts: channel \"my-channel\": unexpected status code 400: invalid_token

Use the Sauron API to update your Alertmanager configuration to specify valid Slack credentials.

- Slack reports channel not found:

my-receiver-name/slack[0]: notify retry canceled due to unrecoverable error after 1 attempts: channel \"my-channel\": unexpected status code 404: channel_not_found

Use the Sauron API to update your Alertmanager configuration to specify a valid Slack channel.

- Slack reports channel has been archived:

my-receiver-name/slack[0]: notify retry canceled due to unrecoverable error after 1 attempts: channel \"#my-archived-channel\": unexpected status code 410: channel_is_archived

Either unarchive the channel in Slack, or use the Sauron API to update your Alertmanager configuration to specify a channel that is not archived.

- Unable to reach Slack:

my-receiver-name/slack[0]: notify retry canceled after 17 attempts: Post \"<redacted>\": proxyconnect tcp: dial tcp :0: connect: connection refused

Check the Slack URL, and ensure no proxy_url was supplied in the

Alertmanager configuration.

OCEAN Token Expiring

An OCEAN token in the customer-supplied Alertmanager configuration has expired, or will expire soon.

Possible Action

Get a new OCEAN token, and use the Sauron API to update the Alertmanager configuration to use the new token. See the OCEAN External Webhook Onboarding Guide for information about how to get a new token.